Introducing Python ML

If you're looking to build AI-powered applications, you've probably considered using Python. And in case you haven't, it's probably time you did. We at Appwrite have thought about it, and our conclusion is simple: to improve the experience of building AI-powered apps with Appwrite, we need a new runtime that caters to AI-specific needs.

We're excited to present Appwrite's newest Function runtime, Python ML. The new runtime has been tailored and optimised for machine learning use cases, saving you time and hassle and making it much easier to build AI-powered applications.

Python and Machine Learning

Python's simplicity and its rich ecosystem of data science libraries have made it the preferred language for machine learning research and implementation. The jewels of this ecosystem are libraries and frameworks designed specifically for machine learning, such as TensorFlow, PyTorch, scikit-learn, Keras, and NLTK. These libraries provide pre-built functions and algorithms for common machine learning tasks, enabling developers to build and train models quickly and efficiently.

Why build a new runtime

Appwrite's Python runtime has been one of the most popular Function runtimes. However, it has a limitation when it comes to machine learning use cases. The runtime image is built on an Alpine Docker image. We chose Alpine because its images offer a minimal and clean distribution of Linux: the current Python runtime has a compressed size of under 22MB. Unfortunately, Alpine doesn't support installing machine learning libraries like NumPy and TensorFlow due to the absence of some core libraries and build tools.

We decided to build a new runtime image to overcome this limitation and, at the same time, benefit from the hardware-specific optimisations required for running models at scale. The Python ML runtime lets you install the libraries and tools required for machine learning with ease. While we procure and build world-class GPU infrastructure for training, fine-tuning, and inference, please note that the Python ML runtime is CPU-only during the beta. We understand this can be a challenge for certain use cases; however, we have some actionable tips and tricks to work around the limitation in the interim.

Getting started

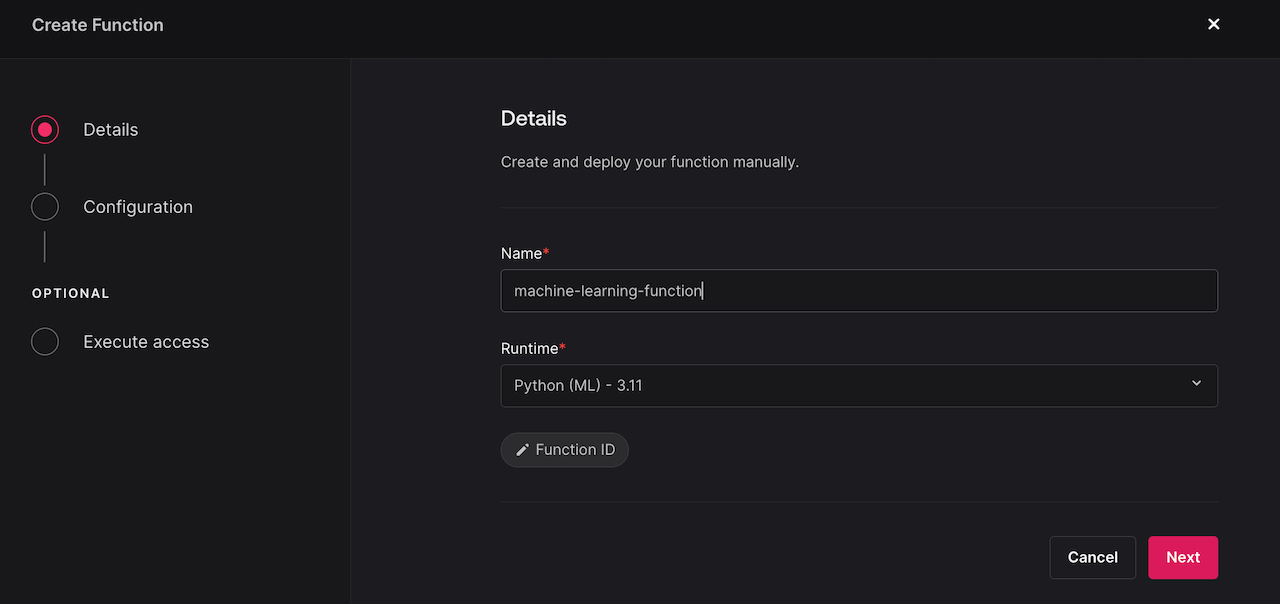

To get started with the new runtime, head to the Appwrite Console, click Functions in the left sidebar, and then click the Create Function button.

Select Python (ML) 3.11 as the runtime, then follow the step-by-step wizard to create the function.

Here's an example function that trains a simple neural network on the MNIST dataset using TensorFlow and TensorFlow Datasets:

```python
import tensorflow as tf
import tensorflow_datasets as tfds


def normalize_img(image, label):
    # Scale pixel values from uint8 [0, 255] to float32 [0, 1].
    return tf.cast(image, tf.float32) / 255.0, label


def main(context):
    # Load MNIST as (image, label) pairs, along with dataset metadata.
    (ds_train, ds_test), ds_info = tfds.load(
        "mnist",
        split=["train", "test"],
        shuffle_files=True,
        as_supervised=True,
        with_info=True,
    )

    # Training pipeline: normalize, cache, shuffle, batch, prefetch.
    ds_train = ds_train.map(normalize_img, num_parallel_calls=tf.data.AUTOTUNE)
    ds_train = ds_train.cache()
    ds_train = ds_train.shuffle(ds_info.splits["train"].num_examples)
    ds_train = ds_train.batch(128)
    ds_train = ds_train.prefetch(tf.data.AUTOTUNE)

    # Evaluation pipeline: no shuffling needed.
    ds_test = ds_test.map(normalize_img, num_parallel_calls=tf.data.AUTOTUNE)
    ds_test = ds_test.batch(128)
    ds_test = ds_test.cache()
    ds_test = ds_test.prefetch(tf.data.AUTOTUNE)

    # A small fully connected network that outputs 10 class logits.
    model = tf.keras.models.Sequential(
        [
            tf.keras.layers.Flatten(input_shape=(28, 28)),
            tf.keras.layers.Dense(128, activation="relu"),
            tf.keras.layers.Dense(10),
        ]
    )

    model.compile(
        optimizer=tf.keras.optimizers.Adam(0.001),
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
    )

    model.fit(
        ds_train,
        epochs=6,
        validation_data=ds_test,
    )

    return context.res.json({"success": True})
```

Find the full source code in our GitHub repository.

Best practices

The Python ML runtime is in beta. We plan to iterate in response to developer demand and needs. For now, the runtime is CPU-only. Here are some tips and tricks to help you overcome this limitation:

Smaller models & TensorFlow Lite

Smaller models are faster to load and execute. Avoid using large models that require a lot of memory and CPU resources.

TensorFlow Lite models are optimized for mobile and edge devices. They are smaller in size and run on the CPU. A typical TensorFlow model can be up to 10x smaller when converted to a TensorFlow Lite model, with only a small loss in accuracy. You can convert your TensorFlow models to TensorFlow Lite models using the TensorFlow Lite Converter.
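As a rough sketch of that conversion step, the snippet below wraps `tf.lite.TFLiteConverter` in a small helper. The helper names `convert_to_tflite` and `save_tflite` and the `quantize` flag are illustrative, not part of any Appwrite or TensorFlow API, and the size reduction you actually get will depend on your model. TensorFlow is imported inside the function so the module can be loaded even where it is not installed.

```python
def convert_to_tflite(model, quantize=True):
    """Convert a trained Keras model to TensorFlow Lite flatbuffer bytes.

    `convert_to_tflite` and `quantize` are hypothetical names for this sketch.
    """
    import tensorflow as tf  # lazy import; requires tensorflow to be installed

    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    if quantize:
        # Default optimizations enable post-training quantization,
        # which typically accounts for much of the size reduction.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()


def save_tflite(tflite_bytes, path="model.tflite"):
    """Write the converted model to disk and return its size in bytes."""
    with open(path, "wb") as f:
        f.write(tflite_bytes)
    return len(tflite_bytes)
```

After training, calling `save_tflite(convert_to_tflite(model))` would produce a `model.tflite` file that you can ship with your function and compare in size against the original model.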

Async executions

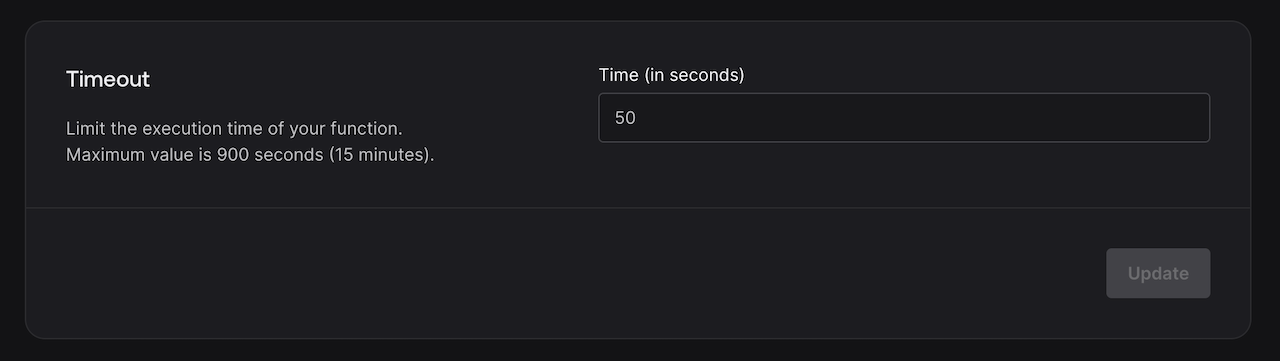

Synchronous executions have a global timeout limit of 30 seconds on Appwrite Cloud. For long-running tasks, we therefore recommend using asynchronous function executions. This allows you to run multiple tasks concurrently, set your own timeout period, and make the most of the compute resources available. Create an asynchronous execution using the async parameter in the createExecution endpoint.

If you see FUNCTION_SYNCHRONOUS_TIMEOUT errors when executing your function, navigate to your Function settings and try increasing your timeout period to a larger number, such as 50 seconds or more.
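To make the asynchronous call concrete, here is a minimal sketch that builds a createExecution request against the REST API using only the Python standard library. It assumes the Appwrite Cloud endpoint `https://cloud.appwrite.io/v1`, a server API key that is allowed to execute functions, and placeholder project and function IDs; `build_async_execution_request` is a hypothetical helper name. The official SDKs wrap the same endpoint.

```python
import json
import urllib.request

APPWRITE_ENDPOINT = "https://cloud.appwrite.io/v1"  # assumed Appwrite Cloud endpoint


def build_async_execution_request(project_id, api_key, function_id, payload):
    """Build (but do not send) a createExecution request with async enabled."""
    url = f"{APPWRITE_ENDPOINT}/functions/{function_id}/executions"
    body = {
        "async": True,  # queue the execution instead of waiting for the result
        "body": json.dumps(payload),  # passed to the function as its request body
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Appwrite-Project": project_id,
            "X-Appwrite-Key": api_key,
        },
    )


# Placeholder values for illustration only.
request = build_async_execution_request(
    "PROJECT_ID", "API_KEY", "FUNCTION_ID", {"task": "classify"}
)
# To send it: urllib.request.urlopen(request)
```

Because the execution is queued rather than awaited, the call returns without the function's output; you can check the execution's status later in the Console or through the API.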

Training vs inference

The Python ML runtime is better suited to inference tasks. Training models on the runtime is not recommended, as it can be slow and resource-intensive while the runtime is CPU-only during the beta. We recommend training your models on a local machine or a cloud-based GPU instance and then deploying the trained model to the Python ML runtime for inference.

If your training does not require intensive GPU resources, you can train your models during the build step of the function and then deploy the trained model to the runtime. This will allow you to run inference tasks without the need for repeated training.
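The build-then-serve pattern might look like the sketch below. A tiny least-squares line fit stands in for a real model so the example stays dependency-free; in practice you would save a TensorFlow or scikit-learn model instead. The file name `model.json`, the `MODEL_PATH` variable, the helper names, and the treatment of `context.req.body` as parsed JSON are all assumptions for illustration. The idea is that `train_and_save` runs during the function's build step, while the handler loads the saved artifact once and reuses it.

```python
import json
import os

MODEL_PATH = os.environ.get("MODEL_PATH", "model.json")  # hypothetical artifact location
_model = None  # cached across invocations while the container stays warm


def train_and_save(xs, ys, path=MODEL_PATH):
    """Build-step stand-in: fit y = slope * x + intercept and save the parameters."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    with open(path, "w") as f:
        json.dump({"slope": slope, "intercept": mean_y - slope * mean_x}, f)


def load_model(path=MODEL_PATH):
    """Load the saved parameters once and reuse them for later requests."""
    global _model
    if _model is None:
        with open(path) as f:
            _model = json.load(f)
    return _model


def main(context):
    """Inference-only handler: no training happens at request time."""
    model = load_model()
    x = float(context.req.body["x"])  # assumes a JSON body such as {"x": 3}
    return context.res.json({"prediction": model["slope"] * x + model["intercept"]})
```

Keeping the loaded model in a module-level variable means only the first request in a fresh container pays the loading cost.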

Third-party integrations

For all other use cases, you can use third-party AI services with Appwrite Functions. Perplexity, Replicate, and OpenAI offer powerful APIs that can be used to train and run models on their infrastructure.
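As one illustration of the third-party route, the sketch below calls OpenAI's Chat Completions REST endpoint from inside a function, using only the standard library. The environment variable name `OPENAI_API_KEY`, the default model name, and the helper `build_chat_request` are assumptions; check the provider's documentation for current models and parameters.

```python
import json
import os
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, api_key, model="gpt-4o-mini"):
    """Build (but do not send) a Chat Completions request. The model name is an assumption."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def main(context):
    # Assumes the API key is stored as a function environment variable.
    request = build_chat_request("Say hello", os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as response:
        completion = json.loads(response.read())
    reply = completion["choices"][0]["message"]["content"]
    return context.res.json({"reply": reply})
```

With this approach the heavy computation runs on the provider's hardware, so the function itself only needs to make an HTTP call and relay the result.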

We've built a range of example functions and technical guides in our AI documentation.