Go has undeniably become one of the most popular programming languages among developers worldwide. Recently, during Init, we announced the new Go (or Golang) runtime for Appwrite Functions. However, it is one thing for us to claim that our Go functions runtime is performant and quite another to back that claim up. To do so, we ran a benchmark comparing the performance of our Go runtime with that of other Appwrite Functions runtimes.

Setting up the benchmark

To test the raw performance of our Go functions, we created several functions in different programming languages using Open Runtimes, a set of open-source serverless function runtimes developed by Appwrite. We also prepared a benchmarking system for these functions. Here’s how we set everything up.

Creating our benchmark test functions

We created three different types of functions to benchmark different aspects of the function runtimes’ performance.

Hello World

The Hello World function is the simplest benchmark for understanding build speed, cold-start speed, and memory consumption in a minimal function. It features a single path and responds with text to any HTTP request sent to it.

    package handler

    import (
        "github.com/open-runtimes/types-for-go/v4/openruntimes"
    )

    // Main is the function entrypoint. It answers /ping with "Pong"
    // and every other path with "Hello".
    func Main(Context openruntimes.Context) openruntimes.Response {
        if Context.Req.Path == "/ping" {
            return Context.Res.Text("Pong")
        }

        return Context.Res.Text("Hello")
    }

Fibonacci

The Fibonacci benchmark allows us to understand the concurrency model of each runtime for CPU-heavy operations.

    package handler

    import (
        "sync"

        "github.com/open-runtimes/types-for-go/v4/openruntimes"
    )

    // fibonacci is a deliberately naive recursive implementation,
    // used here as a CPU-heavy workload.
    func fibonacci(n int) int {
        if n <= 1 {
            return n
        }

        return fibonacci(n-1) + fibonacci(n-2)
    }

    func Main(Context openruntimes.Context) openruntimes.Response {
        if Context.Req.Path == "/ping" {
            return Context.Res.Text("Pong")
        }

        // Run ten CPU-bound computations concurrently and wait for all of them.
        var wg sync.WaitGroup

        for i := 0; i < 10; i++ {
            wg.Add(1)

            go func() {
                defer wg.Done()
                fibonacci(30)
            }()
        }

        wg.Wait()

        return Context.Res.Text("OK")
    }

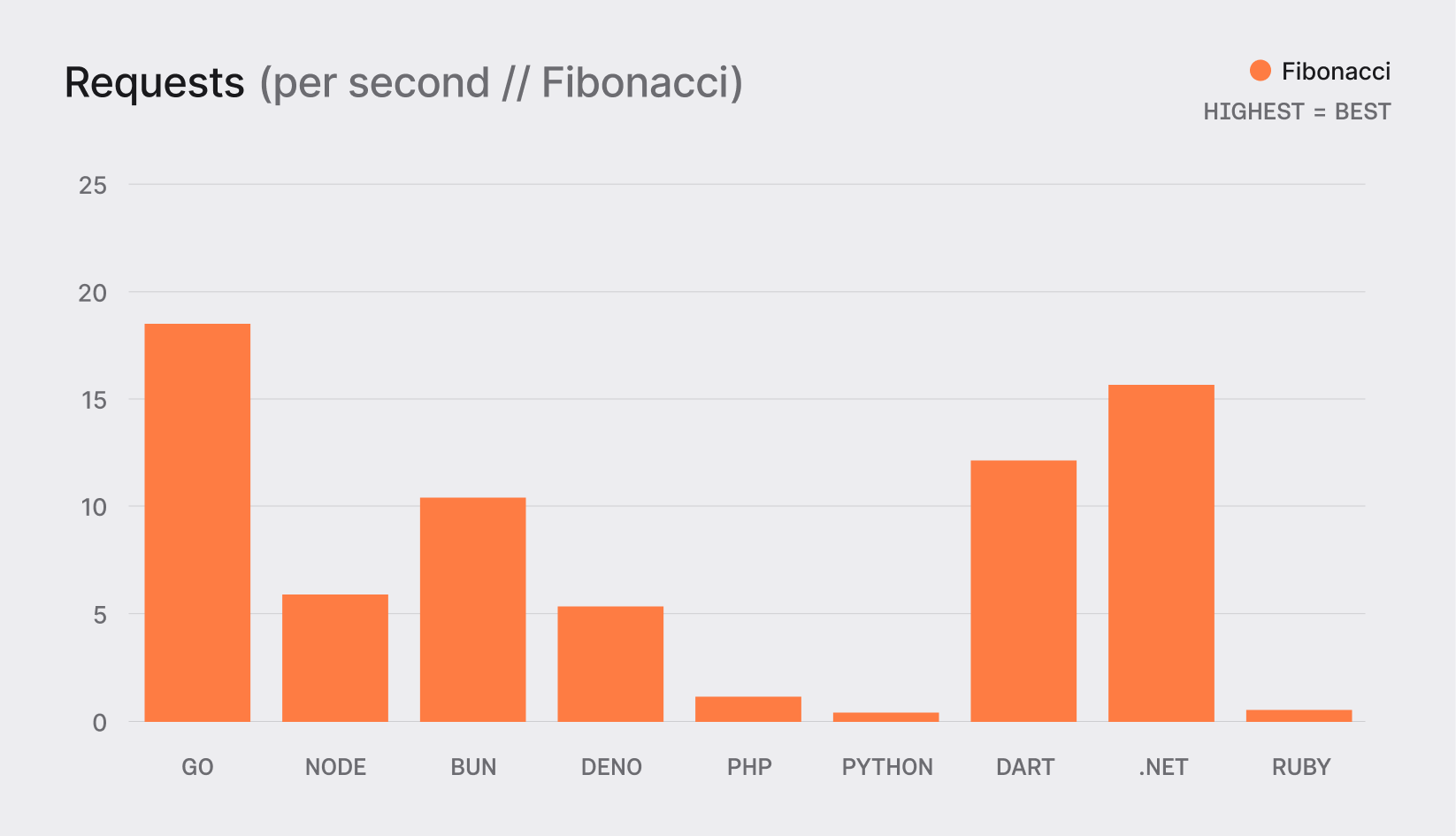

Note that some runtimes may perform noticeably worse than others in this benchmark, which likely indicates that they do not use all CPU cores by default. Using all cores is possible with additional code, but the benchmark is designed to focus on each runtime's native approach.
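To make the point about CPU cores concrete, here is a minimal standalone sketch (not part of the benchmark suite) that runs the same ten `fibonacci(30)` computations twice: once with the Go scheduler limited to a single core via `runtime.GOMAXPROCS(1)`, and once with the default setting. The helper name `runWorkers` is our own invention for this illustration, and the timings you see will depend entirely on your machine.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"time"
)

// fibonacci is the same naive recursive implementation used in the benchmark function.
func fibonacci(n int) int {
	if n <= 1 {
		return n
	}
	return fibonacci(n-1) + fibonacci(n-2)
}

// runWorkers (hypothetical helper) computes fibonacci(n) in `workers` goroutines
// and returns every result together with the elapsed wall-clock time.
func runWorkers(workers, n int) ([]int, time.Duration) {
	results := make([]int, workers)
	var wg sync.WaitGroup

	start := time.Now()
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func(slot int) {
			defer wg.Done()
			results[slot] = fibonacci(n) // each goroutine writes to its own slot
		}(i)
	}
	wg.Wait()

	return results, time.Since(start)
}

func main() {
	fmt.Printf("logical CPUs: %d\n", runtime.NumCPU())

	// Limit the scheduler to one core to imitate a single-threaded runtime.
	previous := runtime.GOMAXPROCS(1)
	_, singleCore := runWorkers(10, 30)

	// Restore the previous setting (by default, all logical CPUs).
	runtime.GOMAXPROCS(previous)
	_, allCores := runWorkers(10, 30)

	fmt.Printf("GOMAXPROCS=1:  %v\n", singleCore)
	fmt.Printf("GOMAXPROCS=%d: %v\n", previous, allCores)
}
```

On a multi-core machine, the second run should typically finish faster, because goroutines are spread across cores without any extra code. That is the "native approach" this benchmark measures.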

Scraper

The Scraper benchmark tests the build speed and cold-start speed of a bigger function with multiple libraries.

    package handler

    import (
        "bytes"

        "github.com/PuerkitoBio/goquery"
        "github.com/go-faker/faker/v4"
        "github.com/go-resty/resty/v2"
        "github.com/open-runtimes/types-for-go/v4/openruntimes"
    )

    type FakerTags struct {
        Name string `faker:"name"`
    }

    func Main(Context openruntimes.Context) openruntimes.Response {
        if Context.Req.Path == "/ping" {
            return Context.Res.Text("Pong")
        }

        // /faker serves a small HTML page containing a randomly generated name.
        if Context.Req.Path == "/faker" {
            fakeData := FakerTags{}

            err := faker.FakeData(&fakeData)
            if err != nil {
                return Context.Res.Text(err.Error(), Context.Res.WithStatusCode(500))
            }

            return Context.Res.Text("<body><h1 id=\"title\">" + fakeData.Name + "</h1></body>")
        }

        // Any other path fetches the /faker page from this same function...
        client := resty.New().SetCloseConnection(true)

        resp, err := client.R().Get("http://127.0.0.1:3000/faker")
        if err != nil {
            return Context.Res.Text(err.Error(), Context.Res.WithStatusCode(500))
        }

        // ...and scrapes the name out of the returned HTML.
        reader := bytes.NewReader(resp.Body())

        doc, err := goquery.NewDocumentFromReader(reader)
        if err != nil {
            return Context.Res.Text(err.Error(), Context.Res.WithStatusCode(500))
        }

        data := doc.Find("#title").Text()

        return Context.Res.Text(data)
    }

Some interpreted languages may underperform in this benchmark, and this could be solved by introducing a library with a build step to minify the code into a single file. However, this benchmark focuses on the runtime’s native approach, so these improvements were not made.

You can find the benchmark functions for all the tested languages in this GitHub repo.

Preparing the benchmarking system

For our benchmarking system, we created a VM on DigitalOcean with the following specs:

8 Intel vCPUs

16 GB RAM

480 GB disk storage

Ubuntu 24.04 (LTS) x64

We then installed Docker to run the functions, hyperfine to benchmark the function builds and cold starts, and k6 to benchmark the function executions (view the preparation instructions).

Our scripts to set up and run the benchmarks are available in our GitHub repo.

Running the benchmarks

Through the benchmarks, we explored several aspects of the function runtimes.

Builds

First, we looked at the builds of our functions. We reviewed both build sizes and build times, and here is what we discovered:

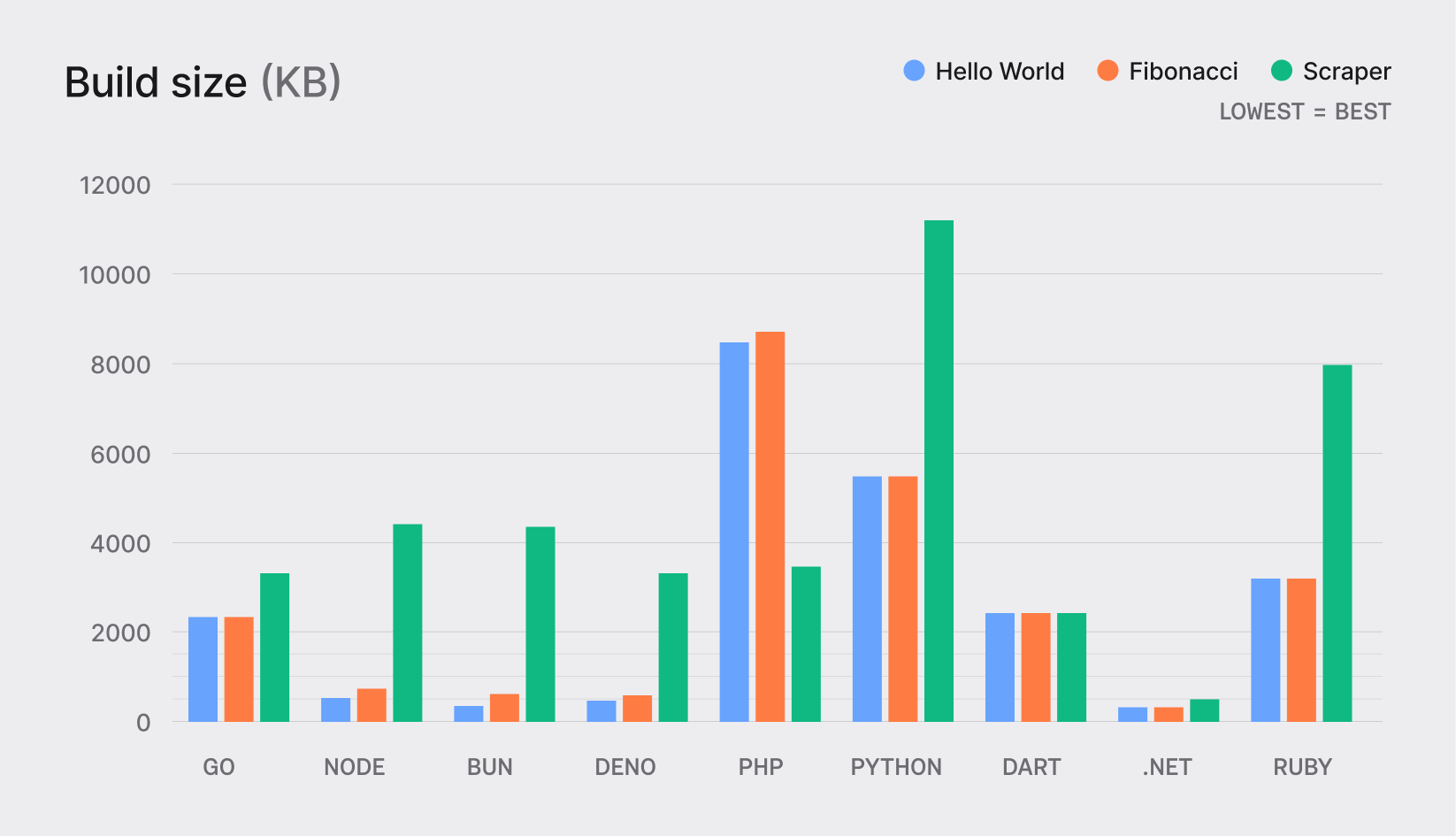

Build size

In the simpler functions with fewer dependencies (Hello World and Fibonacci), Go had a larger build size than Node, Bun, Deno, .NET, and Dart. For the Scraper function, Go remained competitive and had a smaller build size than Node, Bun, Python, and Ruby.

Interestingly, .NET had the smallest build size of all the runtimes, Go included, across every benchmark function.

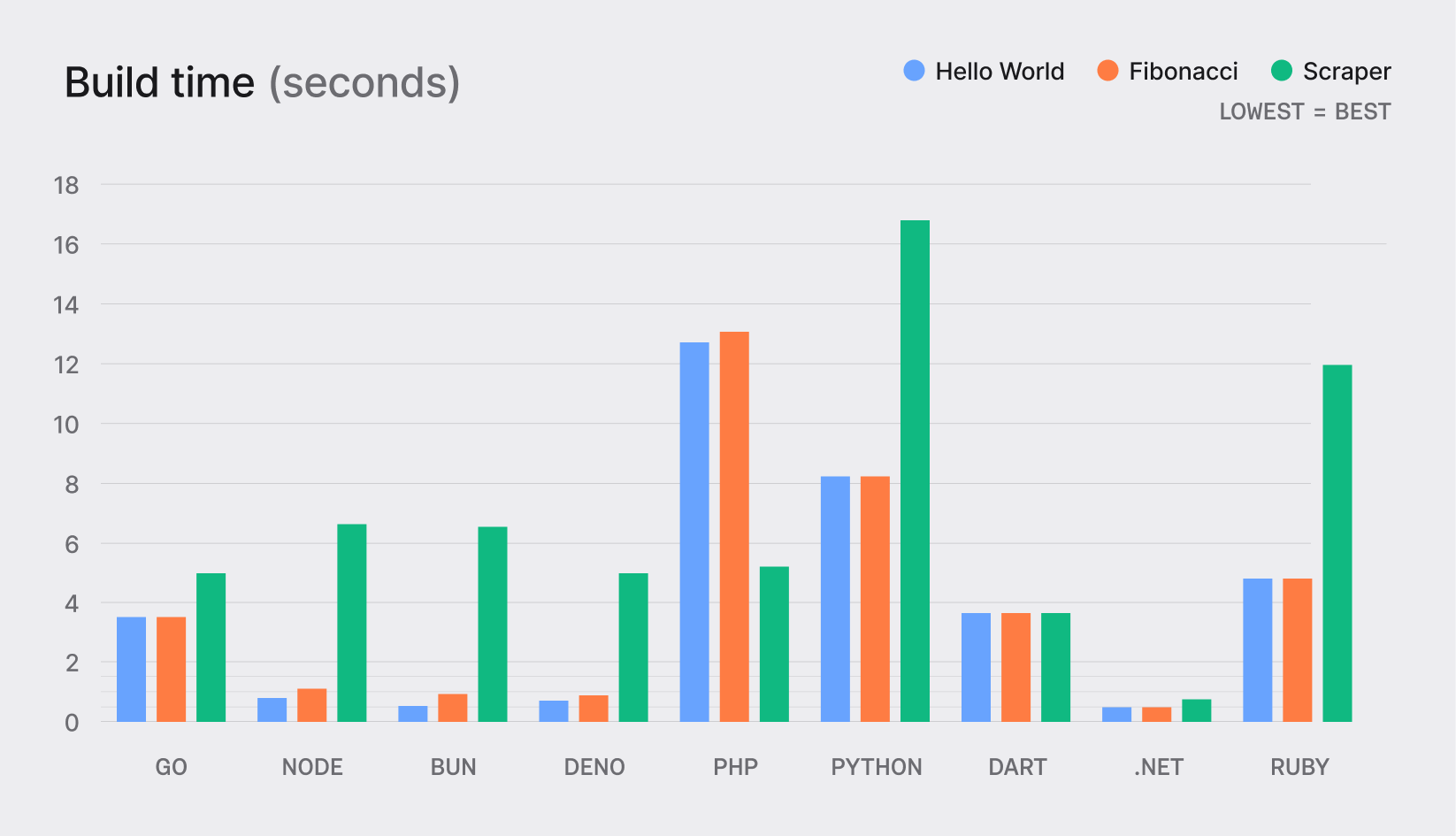

Build time

Overall, Go was a stable choice with moderate performance. It was not the fastest, but it offered more consistent build times than runtimes like Python, Dart, and Ruby.

Special shoutouts go to Bun and PHP, which showed exceptionally fast build times compared to every other runtime. These differences were even more visible in the Scraper function, showcasing the efficiency of their package managers.

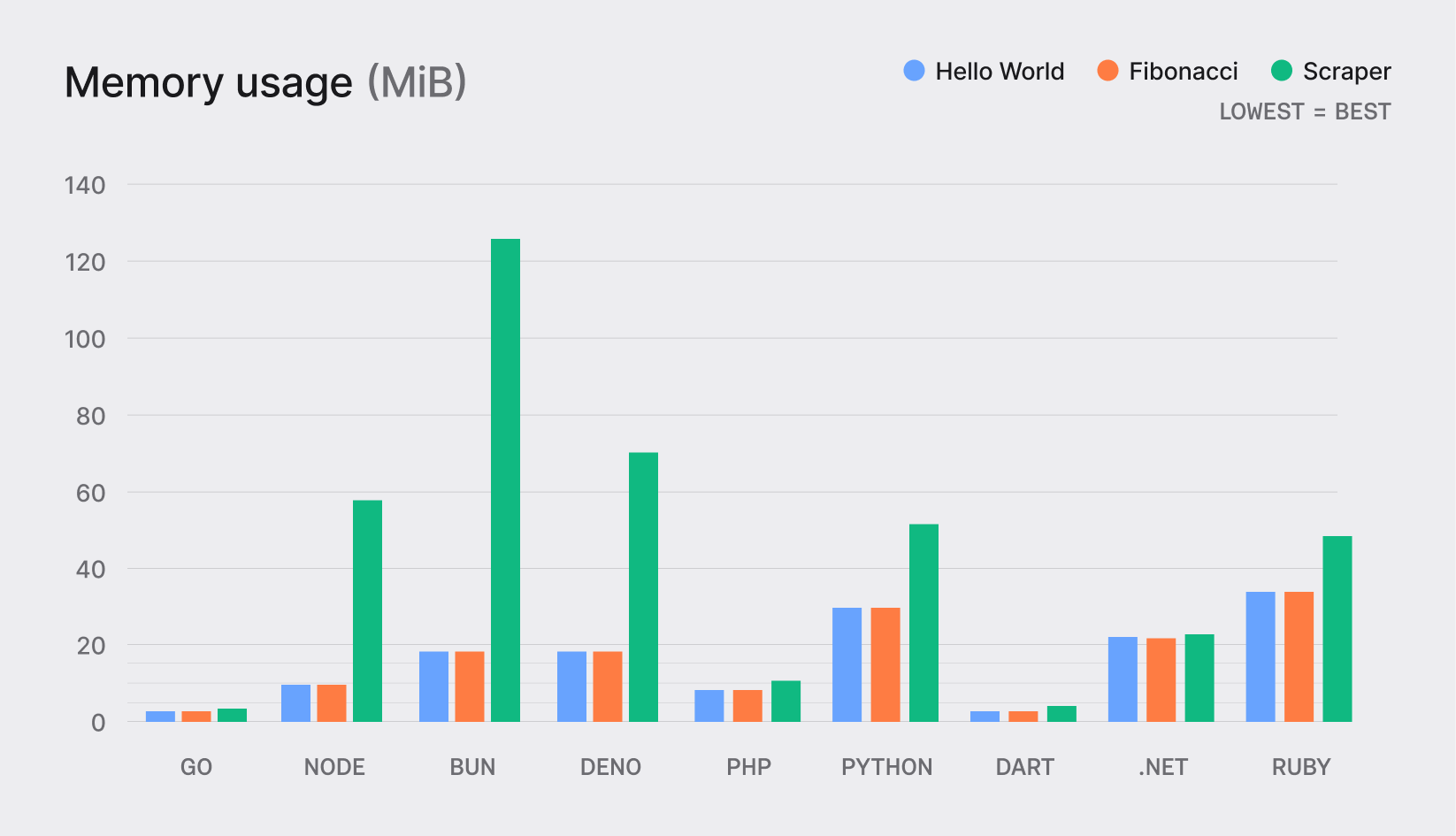

Memory usage

We measured the memory usage of each function before running the benchmarks (i.e., as soon as the functions were set up). Across all three functions, Go consistently used less memory than the other runtimes, making it our most suitable function runtime for applications where memory resources are limited, followed by Dart as our next best option.

Our JavaScript runtimes, Python, Ruby, and .NET had higher base memory usage, which can be a downside for some. However, we noticed that the memory usage of our .NET function did not increase much with the addition of libraries (in the Scraper function), so it may be the best candidate among them.

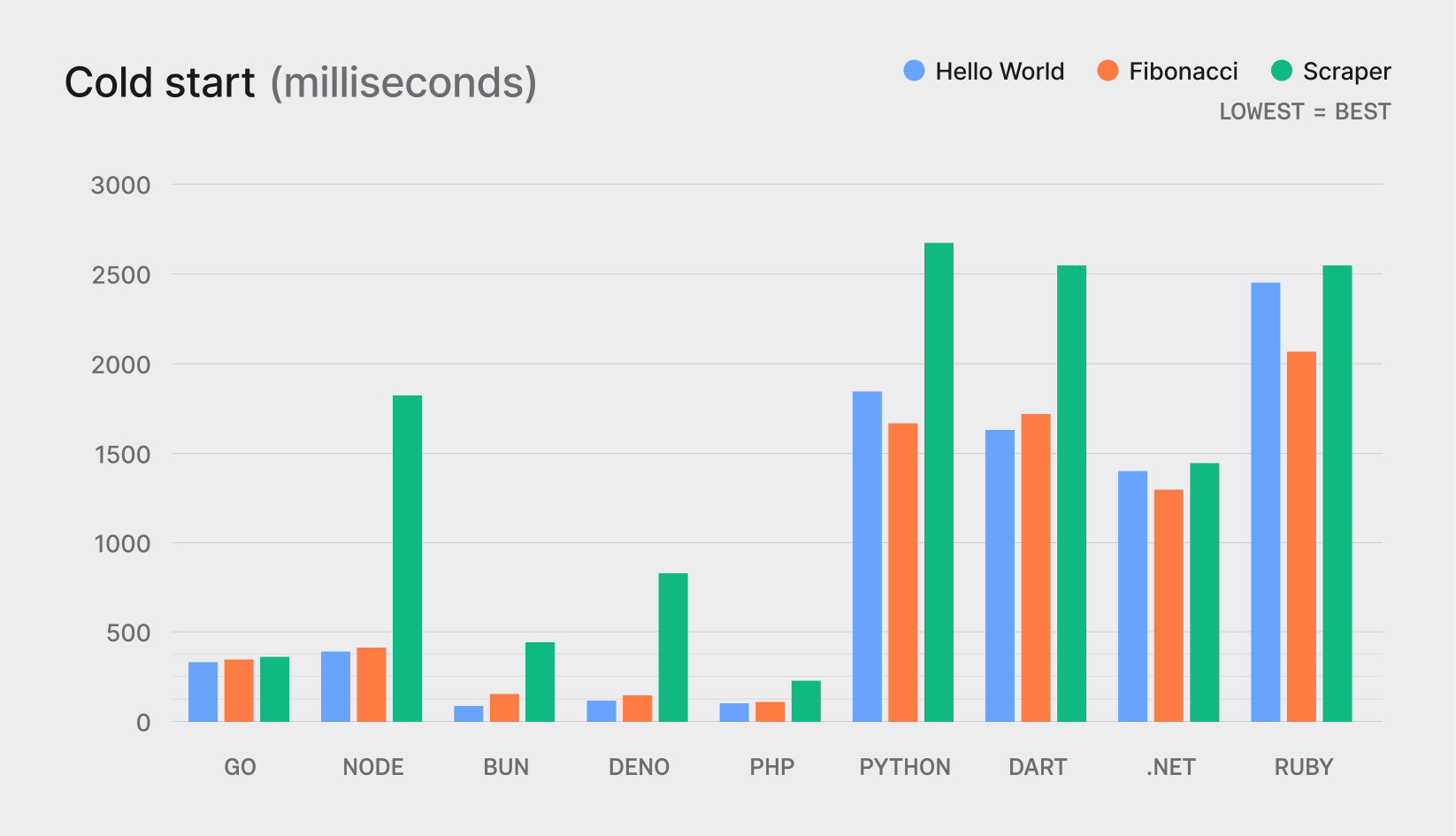

Cold-starts

In our cold-start benchmark, Go emerged as one of the fastest runtimes, particularly when compared to Python, Ruby, and .NET, which showed significantly higher cold-start times. Go's advantage was most noticeable in the Scraper function, suggesting its suitability for more complex projects with multiple dependencies.

PHP was the best choice for very simple, quick-execution tasks, with the best cold-start times in both the Hello World and Fibonacci functions. Among the compiled runtimes, Dart performed similarly to PHP in the Hello World and Fibonacci functions and better than PHP in the Scraper function, making it a suitable alternative to Go.

Function executions

Next, we tested warmed functions to determine their throughput and the runtimes' ability to handle multitasking. Some runtimes only allow single-threaded operations out of the box, and could show improved performance by installing relevant libraries; however, the benchmarks were intended to demonstrate the runtimes’ native performance.

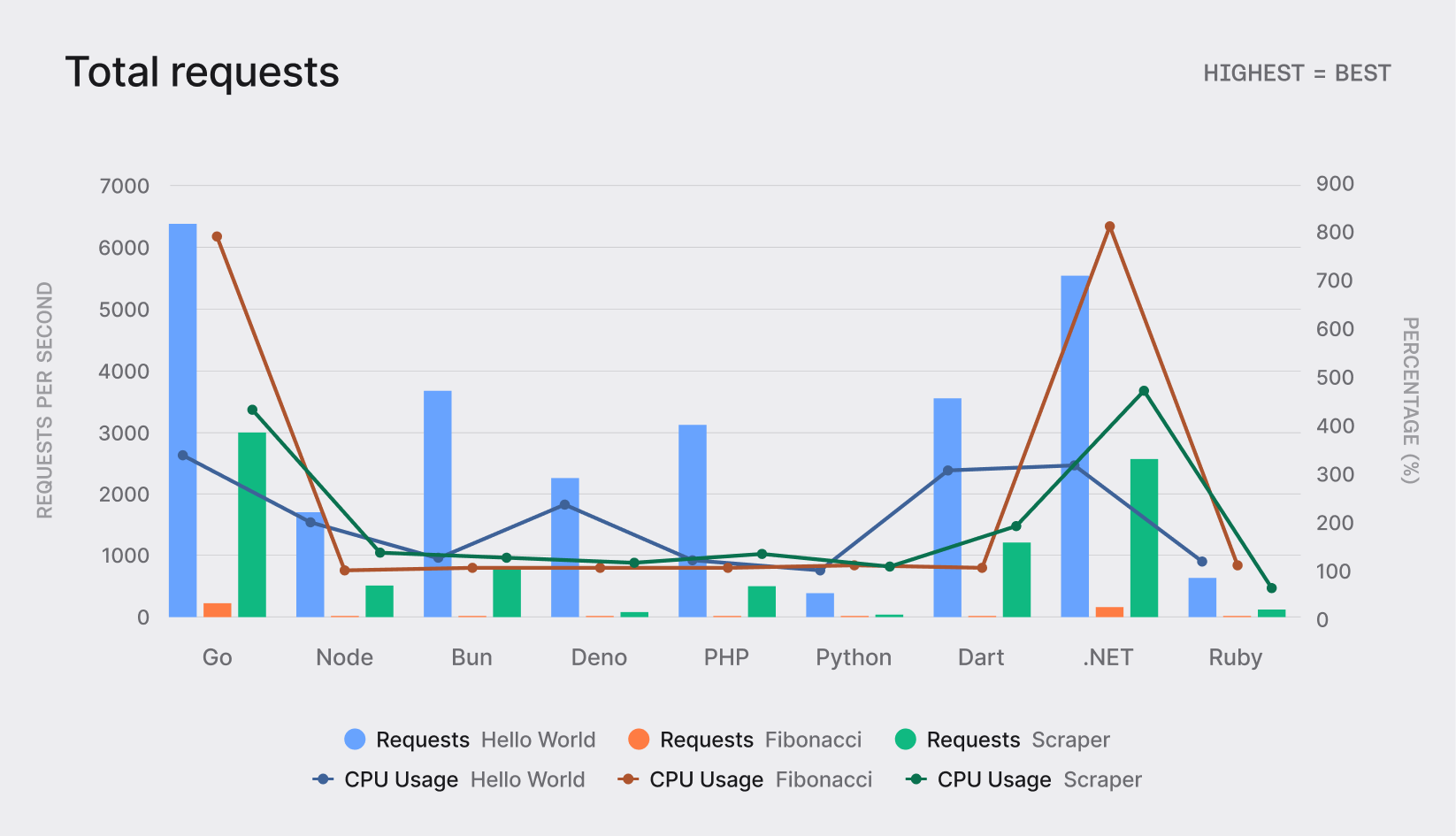

Total requests per second

Go consistently showed the highest requests per second across all benchmarks, along with higher CPU usage, indicating that it effectively utilizes multiple cores for both I/O-bound and CPU-bound tasks.

Additionally, .NET was the only runtime other than Go that was able to utilize all CPU cores natively, i.e., without the need to use special libraries or frameworks. Python was an outlier with substantially lower throughput; however, this could have been caused by the runtime environment and can be optimized in the future.

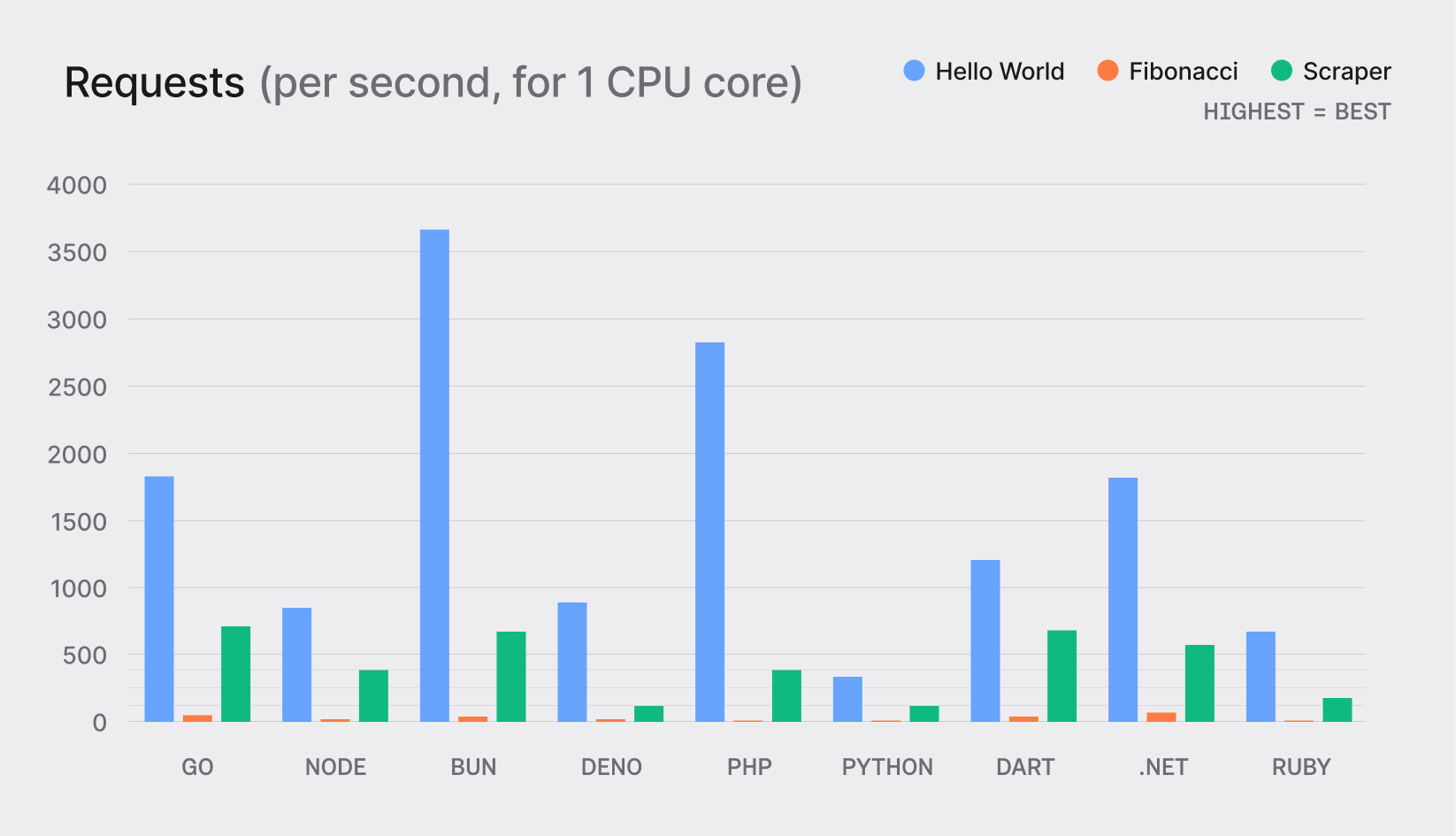

Requests per second for a single CPU core

We also compared each runtime's single-core performance. Go showed balanced performance across different scenarios, excelling particularly in tasks involving high computational needs (e.g., the Fibonacci calculation).

While it was not always the top performer in every scenario (Bun, PHP, and .NET demonstrated better performance in the Hello World function), Go's consistency across different types of workloads showed its versatility and reliability.

The data for all the graphs shared above is available in our GitHub repo.

Conclusion

These benchmarks consistently showed us why the Go runtime is one of the best runtimes for Appwrite Functions. It is highly performant, multitasks well, and is highly memory efficient. If you liked these benchmarks, you can find our scripts and test functions in our GitHub repo.

Learn more about Appwrite Functions and Go by reading: